Deep Retinal Vessel Segmentation For Ultra-Widefield Fundus Photography [project page]

We propose an annotation-efficient method for vessel segmentation in ultra-widefield (UWF) fundus photography (FP) that does not require de novo labeled ground truth. Our method utilizes concurrently captured UWF fluorescein angiography (FA) images and iterates between a multi-modal registration and a weakly-supervised learning step. We construct a new dataset to facilitate further work on this problem.

Deep Retinal Vessel Segmentation For Fluorescein Angiography [project page]

We propose a novel deep learning pipeline for detecting retinal vessels in fluorescein angiography, a modality that has received limited attention in prior work. The pipeline reduces the effort required to generate labeled ground truth data. We release a new dataset to facilitate further research on this problem.

Large Scale Visual Data Analytics for Geospatial Applications [project page]

The widespread availability of high-resolution aerial imagery covering wide geographical areas is spurring a revolution in large scale visual data analytics. This project focuses on how analytics for wide-area motion imagery (WAMI) can be enhanced by incorporating information from geographic information systems. This is enabled by pixel-accurate registration of vector roadmaps to the WAMI frames, and the registered information is then exploited in vehicle tracking and 3D georegistration. The work highlights how computer vision applications are fertile ground for incorporating machine learning and data science methodologies.

Fusing SfM and Lidar for Dense Accurate Depth Map Estimation [project page]

We present a novel framework for precisely estimating dense depth maps by combining 3D lidar scans with a set of uncalibrated RGB camera images of the same scene. The approach fuses structure from motion and lidar to precisely recover the transformation from 3D lidar space to the 2D image plane. This 3D-to-2D map is then used to estimate a dense depth map for each image. The framework does not require the relative position of the lidar and the camera to be fixed, so the sensors can conveniently be deployed independently for data acquisition.
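As a rough illustration of what a 3D-to-2D map does, the sketch below projects lidar points into a sparse depth image with a standard pinhole camera model. It is a minimal sketch and not the project's method: the function name `project_lidar_to_depth` is hypothetical, and the intrinsics `K` and the lidar-to-camera rotation `R` and translation `t` are assumed to be already known, whereas the framework described above recovers the transformation by fusing structure from motion and lidar. Densifying the sparse result is not shown.

```python
import numpy as np


def project_lidar_to_depth(points_xyz, K, R, t, height, width):
    """Project 3D lidar points into a sparse depth map (pinhole model).

    points_xyz: (N, 3) points in the lidar frame.
    K: (3, 3) camera intrinsics; R: (3, 3) rotation; t: (3,) translation.
    K, R, and t are assumed inputs for this illustration.
    """
    # Move the points from the lidar frame into the camera frame.
    cam = points_xyz @ R.T + t
    # Discard points behind the camera.
    cam = cam[cam[:, 2] > 0]

    # Perspective projection to integer pixel coordinates.
    uvw = cam @ K.T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    z = cam[:, 2]

    # Keep only projections that land inside the image.
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z = u[inside], v[inside], z[inside]

    # When several points hit the same pixel, keep the nearest one.
    depth = np.full((height, width), np.inf)
    np.minimum.at(depth, (v, u), z)

    # Pixels with no lidar return are marked with 0.
    depth[np.isinf(depth)] = 0.0
    return depth
```

The output is sparse because lidar returns cover only a small fraction of the pixels; a dense depth map needs an additional interpolation or estimation step on top of this projection.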

Point Cloud Analytics and Applications [project page]

Recent advances in the sensors used for lidar 3D scanners have substantially reduced their cost, spurring an increase in the number of applications for which they are deployed. This work concentrates on point cloud analytics and its applications in different areas, such as the humanities and sensor fusion.

Local-Linear-Fitting-Based Matting for Joint Hole Filling and Depth Upsampling of RGB-D Images [project page]

We propose an approach for jointly filling holes and upsampling depth information for RGB-D images, where RGB color information is available at all pixel locations whereas depth information is only available at lower resolution and entirely missing in small regions referred to as “holes”.

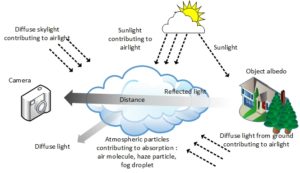

HazeRD: An Outdoor Scene Dataset and Benchmark for Single Image Dehazing [project page]

We provide a new dataset, HazeRD (Haze Realistic Dataset), for benchmarking dehazing algorithms under realistic haze conditions. HazeRD contains ten real outdoor scenes, for each of which five different weather conditions are simulated. All images are high resolution, typically six to eight megapixels.

Visually Informed Multi-Pitch Analysis of String Ensembles [paper]

Multi-pitch analysis of polyphonic music requires estimating concurrent pitches (estimation) and organizing them into temporal streams according to their sound sources (streaming). This is challenging for approaches based on audio alone due to the polyphonic nature of the audio signals. Video of the performance, when available, can be useful to alleviate some of the difficulties.

See and Listen: Score-Informed Association of Sound Tracks to Players in Chamber Music Performance Videos [paper]

Both audio and visual aspects of a musical performance, especially their association, are important for expressing players’ ideas and for engaging the audience. We present a framework that combines audio and video analyses of multi-instrument chamber music performances to associate players in the video with the individual separated instrument sources from the audio, in a score-informed fashion.

Selected Publications